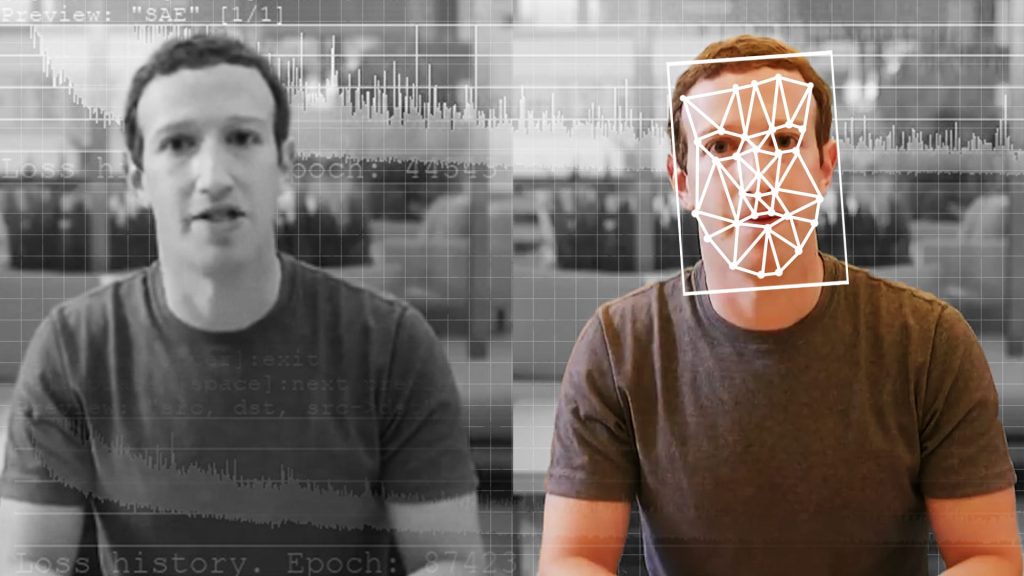

Deepfake technology is the most worrying use of artificial intelligence for crime and exploitation, according to a new report from University College London. Deepfake is an artificial intelligence technique used to produce a video, or to modify its content completely, so that it shows something that wasn't there in the first place.

The University College London research team first identified 20 different ways criminals could use AI over the next 15 years, then asked 31 AI experts to rank them by risk, based on the potential harm, the money criminals could earn, how easy they are to use, and how difficult they are to stop.

Why does Deepfake technology rank first?

Deepfake technology, which criminals use to create AI-generated videos of real people saying things they never said, ranked first for two main reasons:

- First: It is difficult to identify the clips and determine who created them, as automatic detection methods are still not reliable and Deepfake technology keeps getting better at deceiving the human eye. A recent Facebook competition to detect deepfakes using algorithms led researchers to admit that it is still very much an unsolved problem.

- Second: Deepfake technology can be used in a variety of crimes and misdeeds, ranging from defaming public figures to obtaining money from the public by impersonating people. Many fake videos have spread widely in various fields around the world, and Deepfake technology has helped criminals steal millions of dollars.

What are the concerns about using Deepfake technology?

Researchers and technology specialists fear that this technology will make people distrust audio and video evidence, which would cause great societal harm. The specialists pointed out that the more of our lives move online, the greater the risks become. Unlike many traditional crimes, crimes in the digital world can easily be shared, repeated, and even sold, which allows criminal techniques to be marketed and crime to be offered as a service. This means that criminals may be able to outsource the most challenging aspects of their AI-based crime.

The researchers also rated the following AI-enabled crimes as a high concern:

- Driverless vehicles as weapons

- Phishing

- Online data collection for extortion

- Attacks on systems controlled by artificial intelligence

- Fake news

Burglar bots technique:

Researchers were much less concerned about burglar bots, small robots that can enter homes through letterboxes and cat flaps and transmit a lot of information about the house to thieves, including whether anyone is home. They also classified AI-assisted stalking as a crime of lower concern: although it is harmful to victims, it cannot work on a large scale.

Researchers were more afraid of the risks of Deepfake technology, which has been grabbing headlines since the term appeared on Reddit in 2017. Few of those concerns have been realized so far. However, researchers believe that this is set to change as the technology evolves and deepfakes become more and more accessible.
